Global semantic 3D understanding from single-view high-resolution remote sensing (RS) imagery is crucial for Earth observation (EO). However, this task faces significant challenges due to the high costs of annotation and data collection, as well as geographically restricted data availability. Synthetic data offer a promising solution: they are unrestricted and can be annotated automatically, which makes large and diverse datasets possible. We develop a specialized synthetic data generation pipeline for EO and introduce SynRS3D, the largest synthetic RS dataset. SynRS3D comprises 69,667 high-resolution optical images that cover six different city styles worldwide and feature eight land cover types, precise height information, and building change masks. To further enhance its utility, we develop RS3DAda, a novel multi-task unsupervised domain adaptation (UDA) method that, together with SynRS3D, supports an RS-specific transition from synthetic to real scenarios for land cover mapping and height estimation, ultimately enabling global monocular 3D semantic understanding based on synthetic data. Extensive experiments on various real-world datasets demonstrate the adaptability and effectiveness of our synthetic dataset and the proposed RS3DAda method.

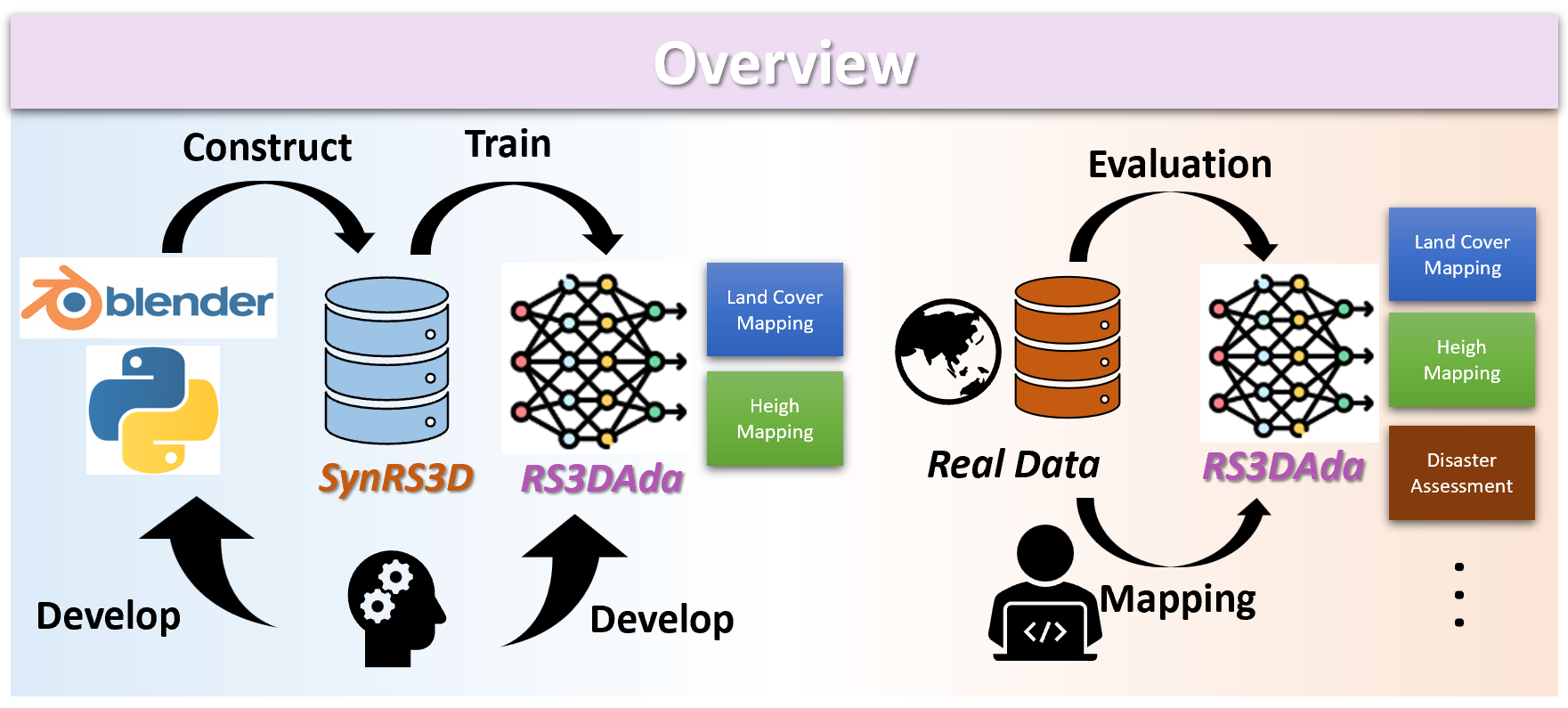

SynRS3D is the largest synthetic dataset for remote sensing, paired with RS3DAda, a multi-task UDA training method. Models trained with them can be applied to various real-world remote sensing applications.

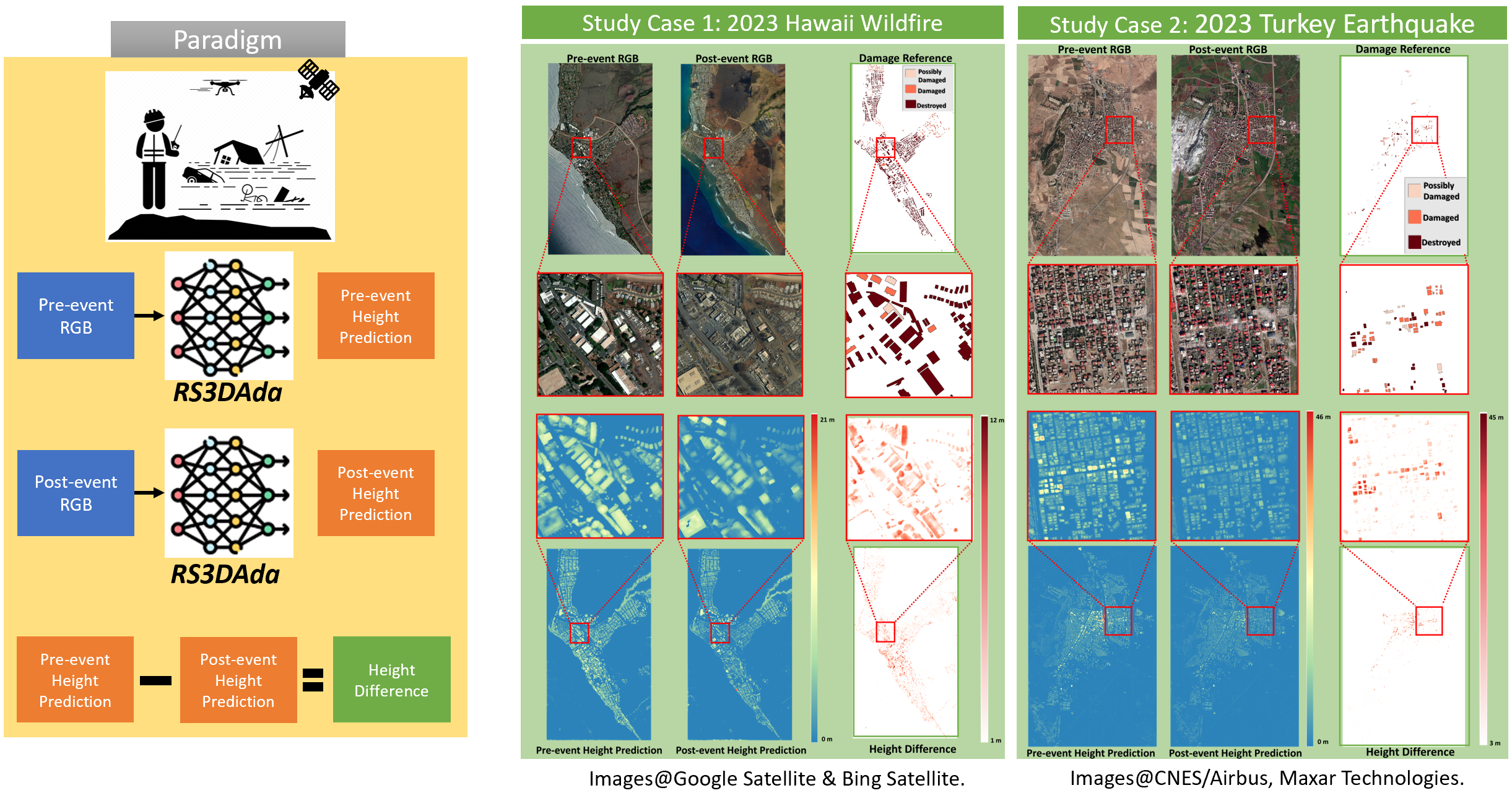

Using RS3DAda, we trained models purely on synthetic SynRS3D data that achieve global 3D semantic reconstruction from real-world monocular remote sensing images.

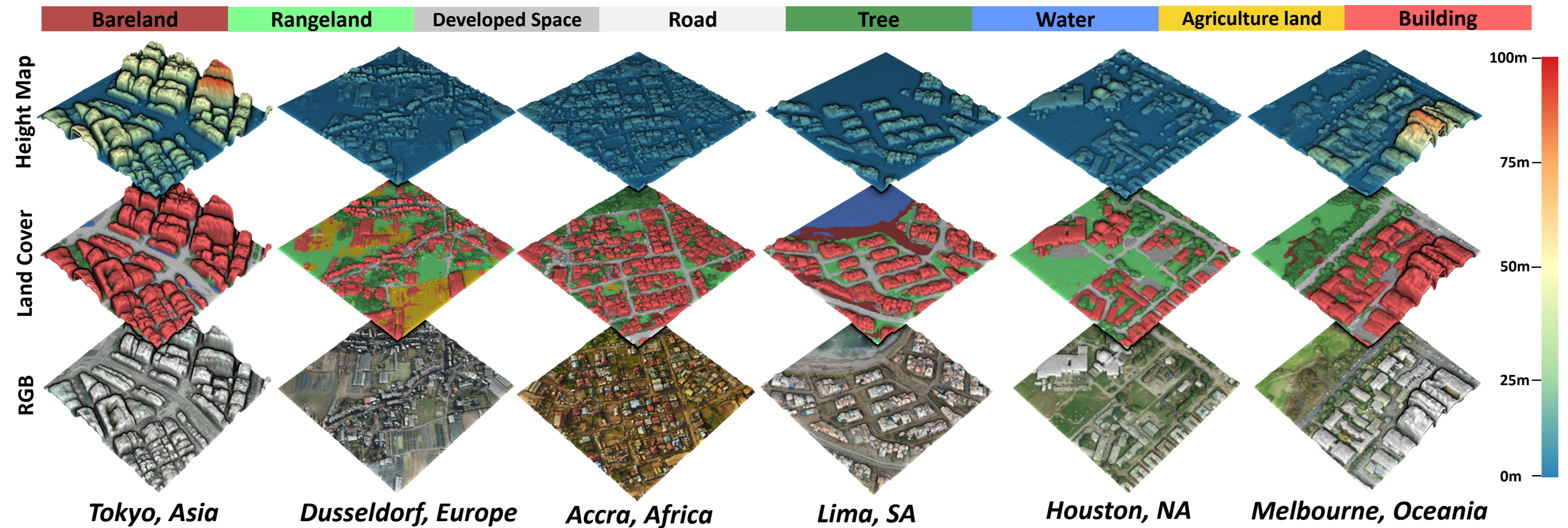

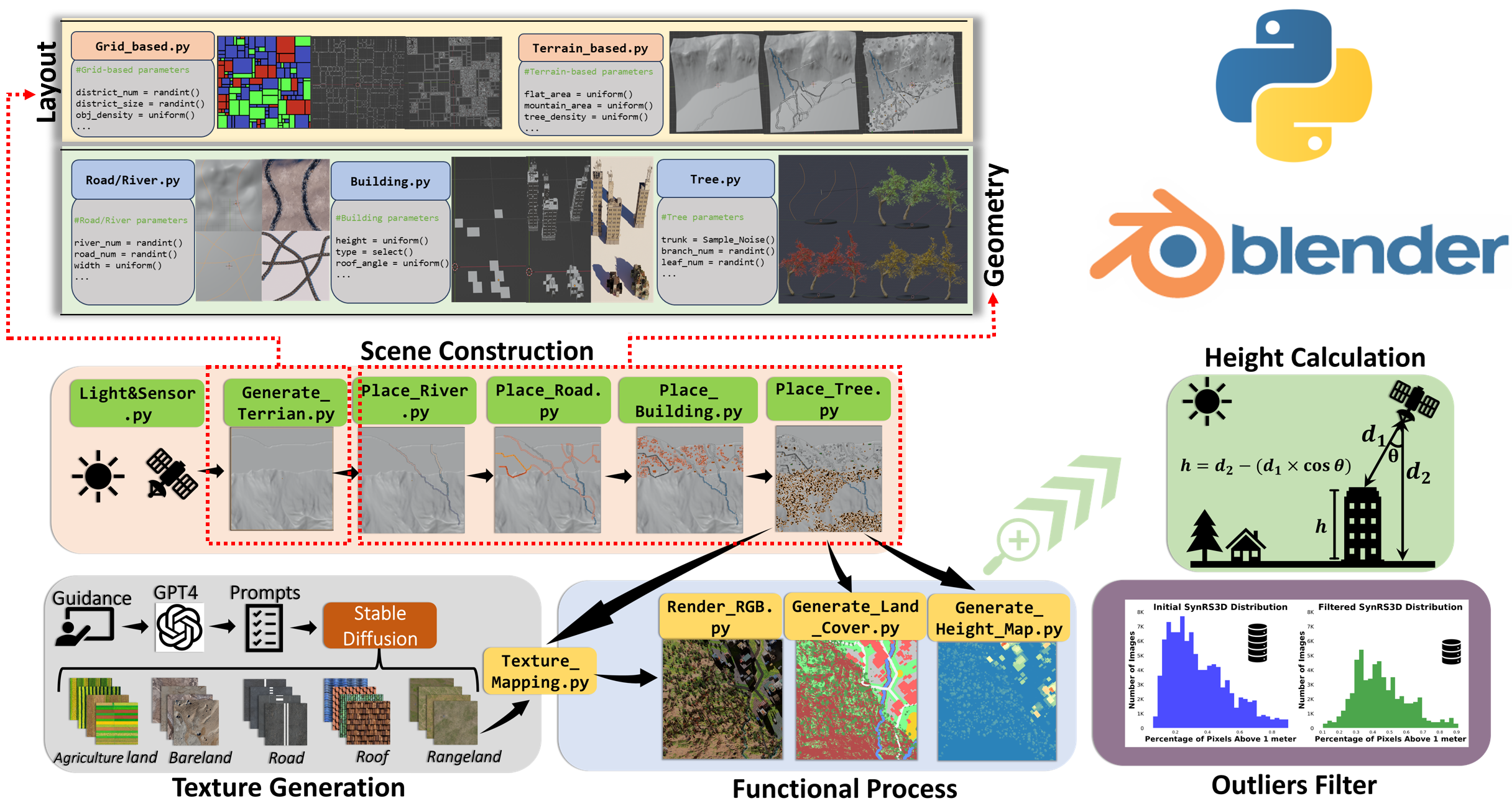

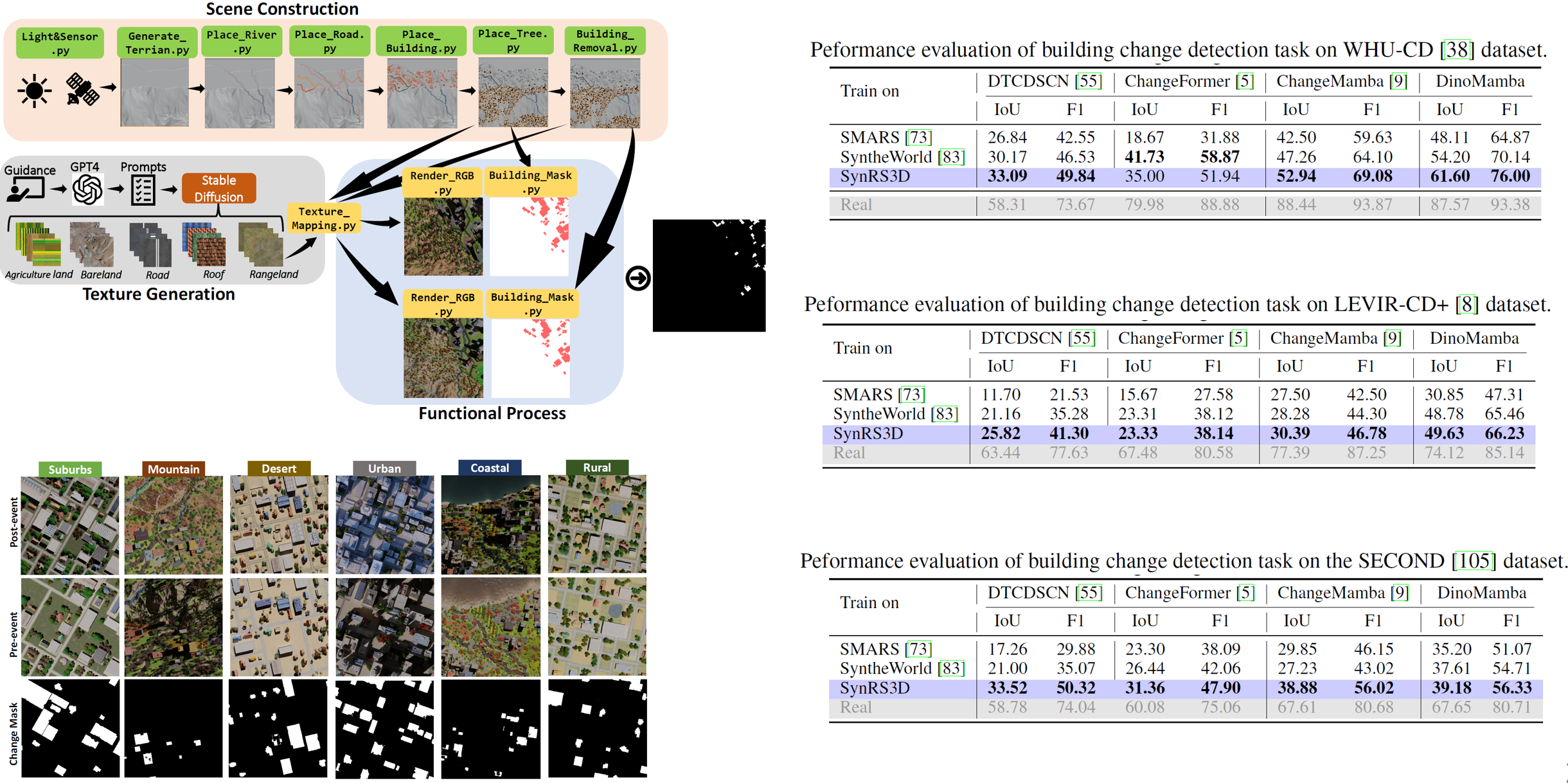

We built a procedural modeling system with Blender and Python that simulates six urban styles from around the world and programmatically generates RGB images, land cover annotations, and normalized digital surface model (nDSM) data.
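An nDSM stores height above ground, conventionally computed as the digital surface model (DSM, elevation of the top surface including buildings and trees) minus the digital terrain model (DTM, bare-ground elevation). The page does not say how the pipeline derives its nDSM, so the sketch below simply assumes the renderer can export a DSM and a DTM as co-registered arrays; the helper name `compute_ndsm` and its `nodata` handling are hypothetical.

```python
from typing import Optional

import numpy as np


def compute_ndsm(dsm: np.ndarray, dtm: np.ndarray, nodata: Optional[float] = None) -> np.ndarray:
    """Hypothetical helper: height above ground = DSM - DTM, clipped at 0.

    Assumes `dsm` and `dtm` are co-registered elevation rasters in metres.
    """
    if dsm.shape != dtm.shape:
        raise ValueError(f"shape mismatch: {dsm.shape} vs {dtm.shape}")
    ndsm = dsm.astype(np.float32) - dtm.astype(np.float32)
    # Small negative values are interpolation noise: nothing sits below the ground.
    ndsm = np.clip(ndsm, 0.0, None)
    if nodata is not None:
        # Propagate missing pixels from either input as NaN.
        invalid = (dsm == nodata) | (dtm == nodata)
        ndsm[invalid] = np.nan
    return ndsm


# Toy 2x3 tile: flat terrain at 100 m, a 12 m building and a 5 m tree.
dsm = np.array([[100.0, 112.0, 112.0],
                [100.0, 105.0, 99.8]])
dtm = np.full((2, 3), 100.0)
ndsm = compute_ndsm(dsm, dtm)
print(ndsm)
```

Clipping at zero is a design choice for illustration: a real pipeline that renders terrain and objects separately may not need it, since its DSM can never fall below its DTM.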

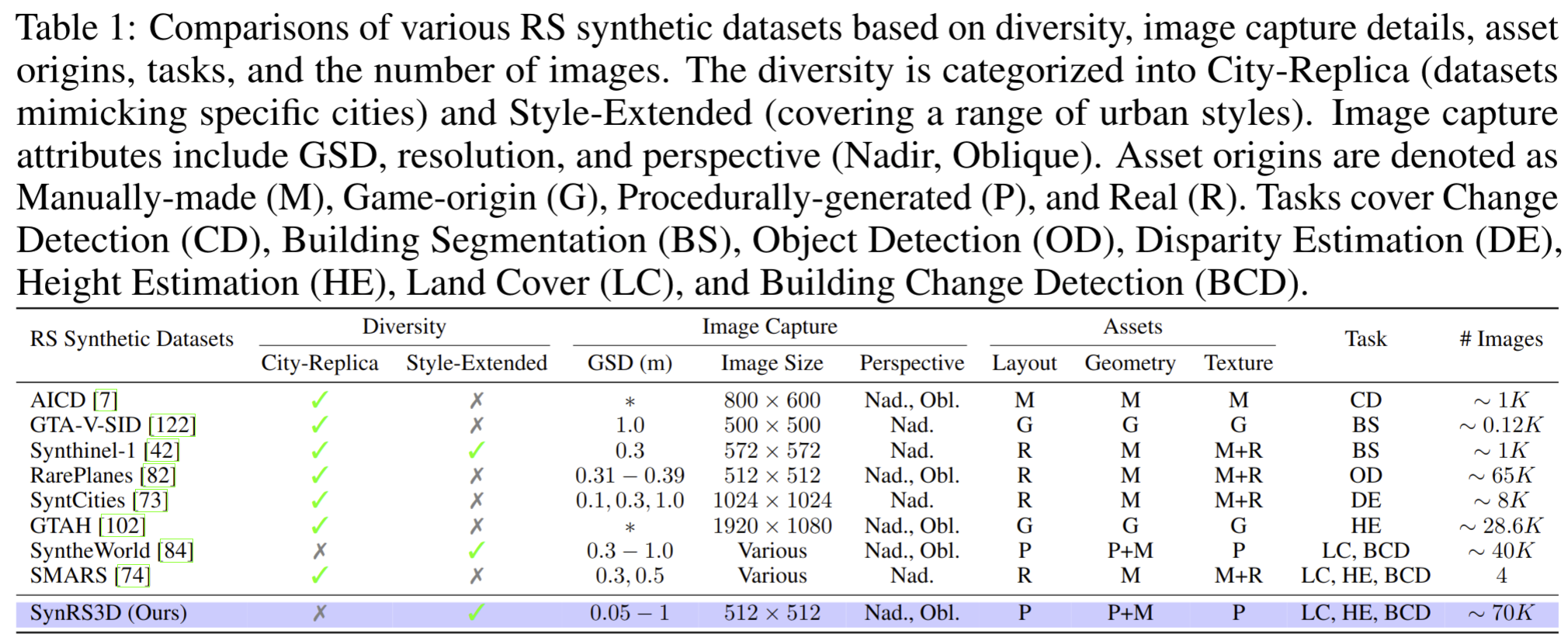

Comparison between SynRS3D and existing synthetic remote sensing datasets across various aspects, including diversity, image capture, assets, supported tasks, scale, and size.

Examples of SynRS3D samples from the six urban styles (RGB, land cover, height map), along with statistical comparisons to other synthetic and real datasets.

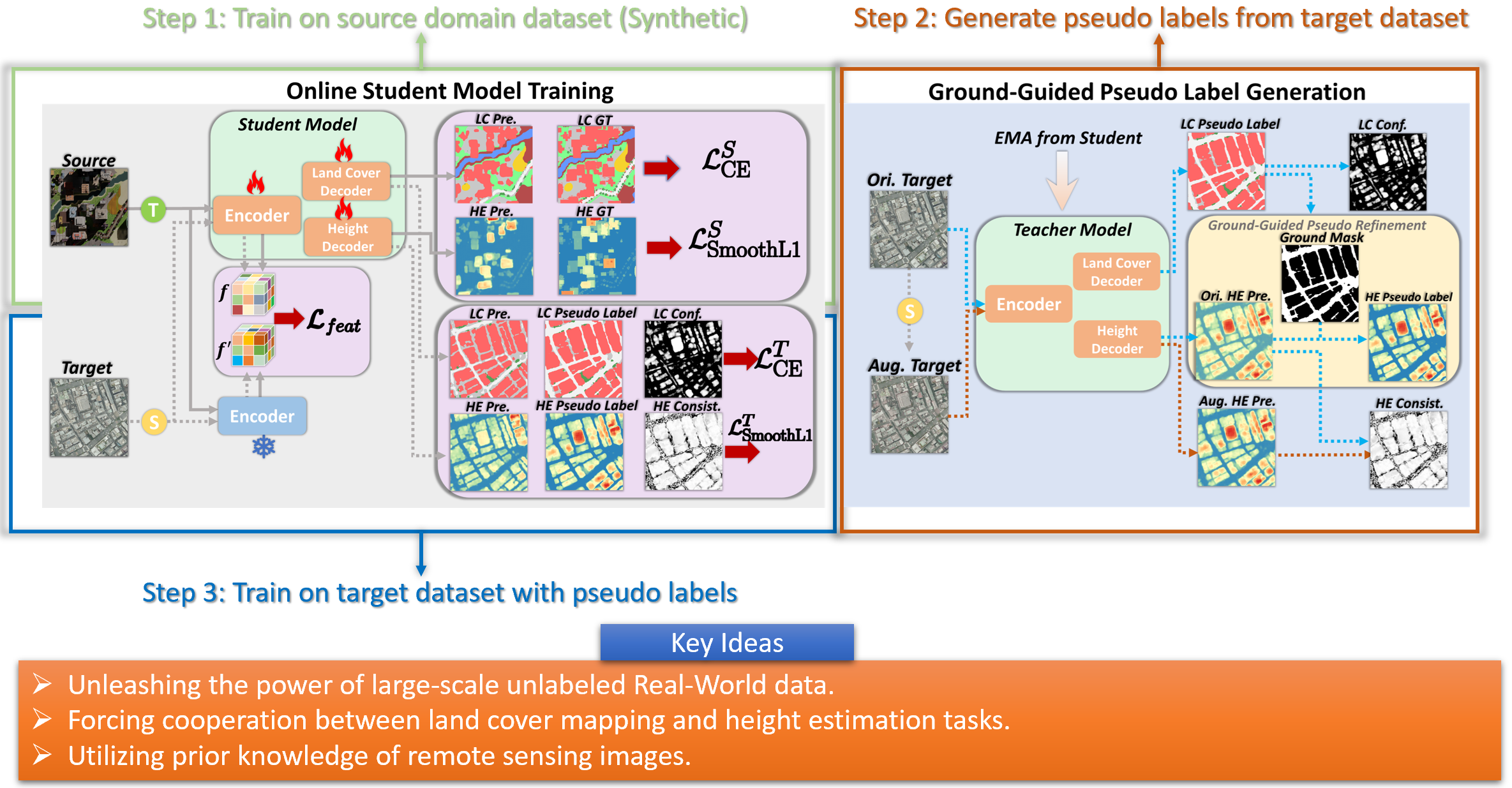

RS3DAda is a multi-task UDA framework built on a teacher-student training scheme, and it is the first UDA method tailored to synthetic-to-real adaptation in remote sensing.
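This page does not detail RS3DAda's internals. As a generic illustration of the teacher-student pattern it names, the sketch below assumes the widely used mean-teacher recipe: the teacher's weights are an exponential moving average (EMA) of the student's, and the teacher's confident land cover predictions on unlabeled real images become pseudo-labels for the student. The decay `alpha`, the confidence `threshold`, `ignore_index=255`, and all function names are hypothetical; RS3DAda's actual pseudo-labeling (including how it handles the height regression task, which has no class probabilities to threshold) may differ.

```python
import numpy as np


def ema_update(teacher: dict, student: dict, alpha: float = 0.999) -> None:
    """In-place EMA: teacher <- alpha * teacher + (1 - alpha) * student."""
    for name, w_s in student.items():
        teacher[name] *= alpha
        teacher[name] += (1.0 - alpha) * w_s


def softmax(logits: np.ndarray, axis: int = 0) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def pseudo_labels(teacher_logits: np.ndarray, threshold: float = 0.9,
                  ignore_index: int = 255) -> np.ndarray:
    """Turn teacher logits of shape (C, H, W) into per-pixel land cover labels.

    Pixels whose highest class probability is below `threshold` are set to
    `ignore_index`, so the student's segmentation loss skips them.
    """
    probs = softmax(teacher_logits, axis=0)
    labels = probs.argmax(axis=0)
    labels[probs.max(axis=0) < threshold] = ignore_index
    return labels


# Toy weights: after one update with alpha=0.9, the teacher moves 10% toward the student.
teacher = {"conv.weight": np.zeros(4)}
student = {"conv.weight": np.ones(4)}
ema_update(teacher, student, alpha=0.9)
print(teacher["conv.weight"])

# Toy logits for 3 classes on a 1x2 image: one confident pixel, one uncertain pixel.
logits = np.array([[[5.0, 0.2]],
                   [[0.0, 0.1]],
                   [[0.0, 0.0]]])  # shape (3, 1, 2)
print(pseudo_labels(logits))
```

The slow-moving teacher gives more stable targets than the student's own predictions, which is the usual motivation for this design in self-training UDA.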

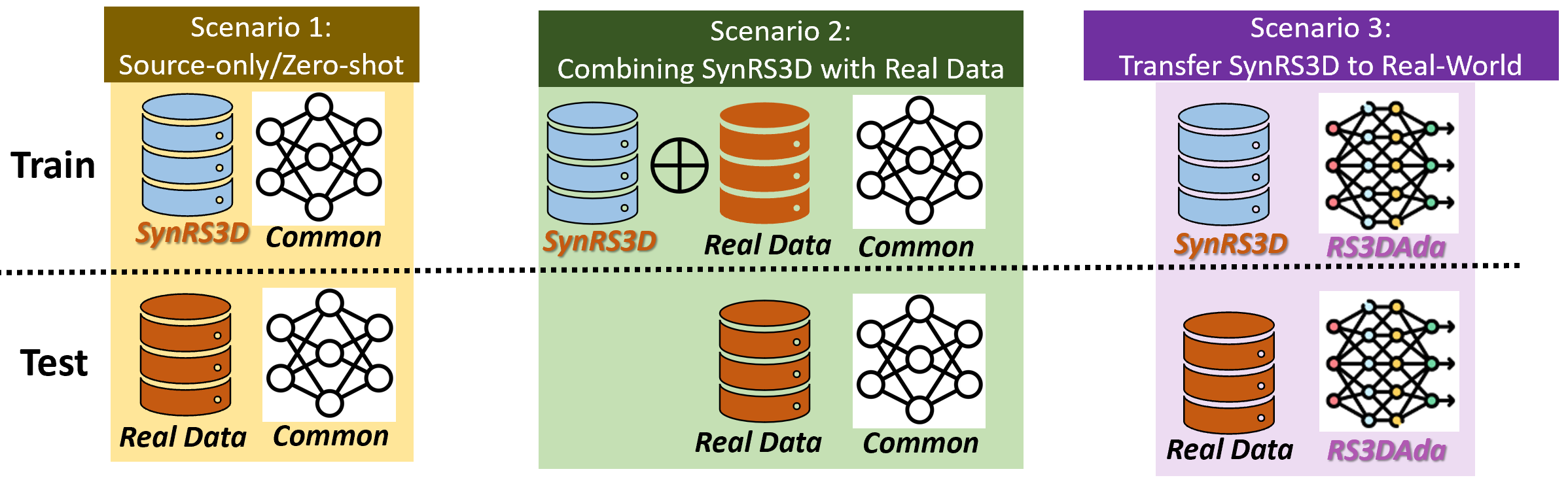

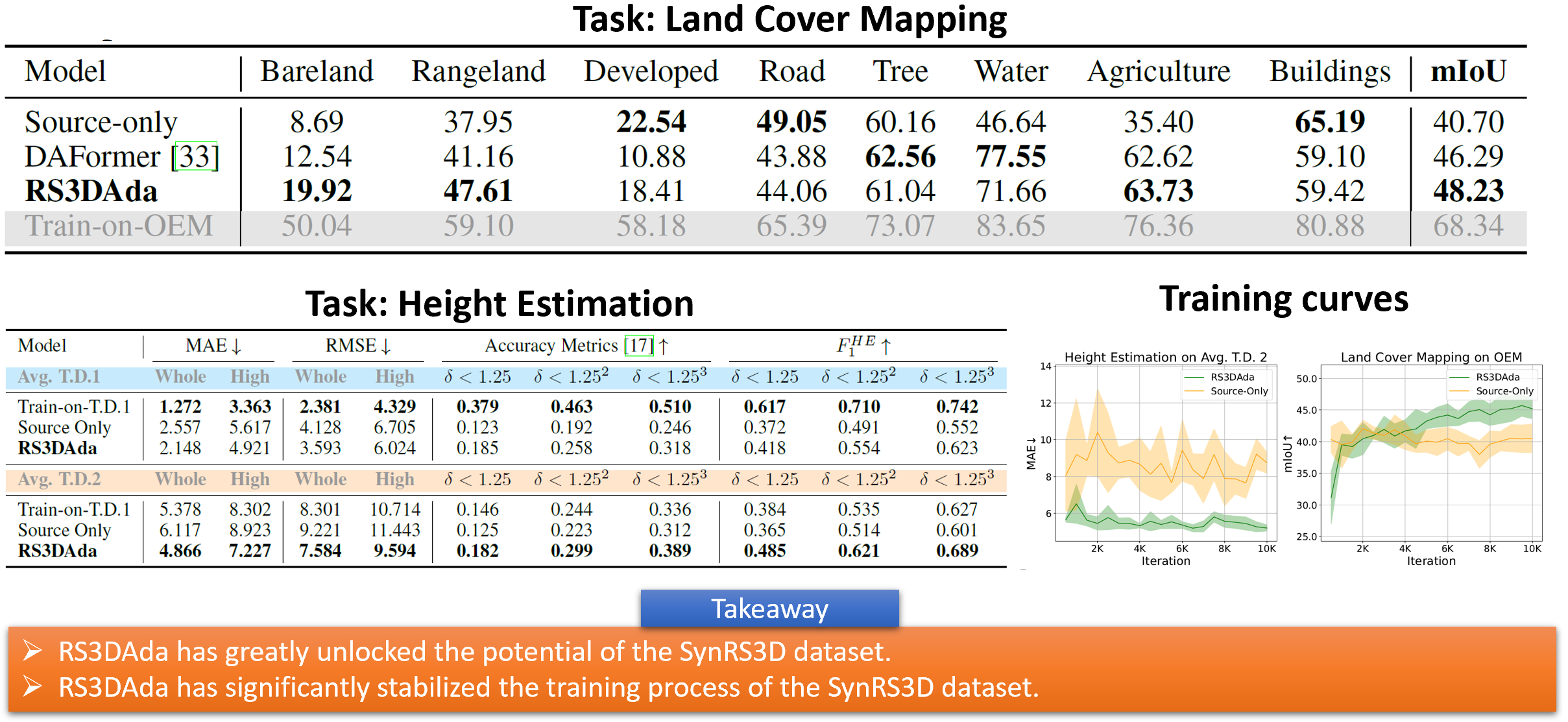

We evaluate under three experimental settings: (1) Source-only, (2) Combining SynRS3D with Real Data Scenarios, and (3) Transfer SynRS3D to Real-World Scenarios, which verifies the effectiveness of RS3DAda.

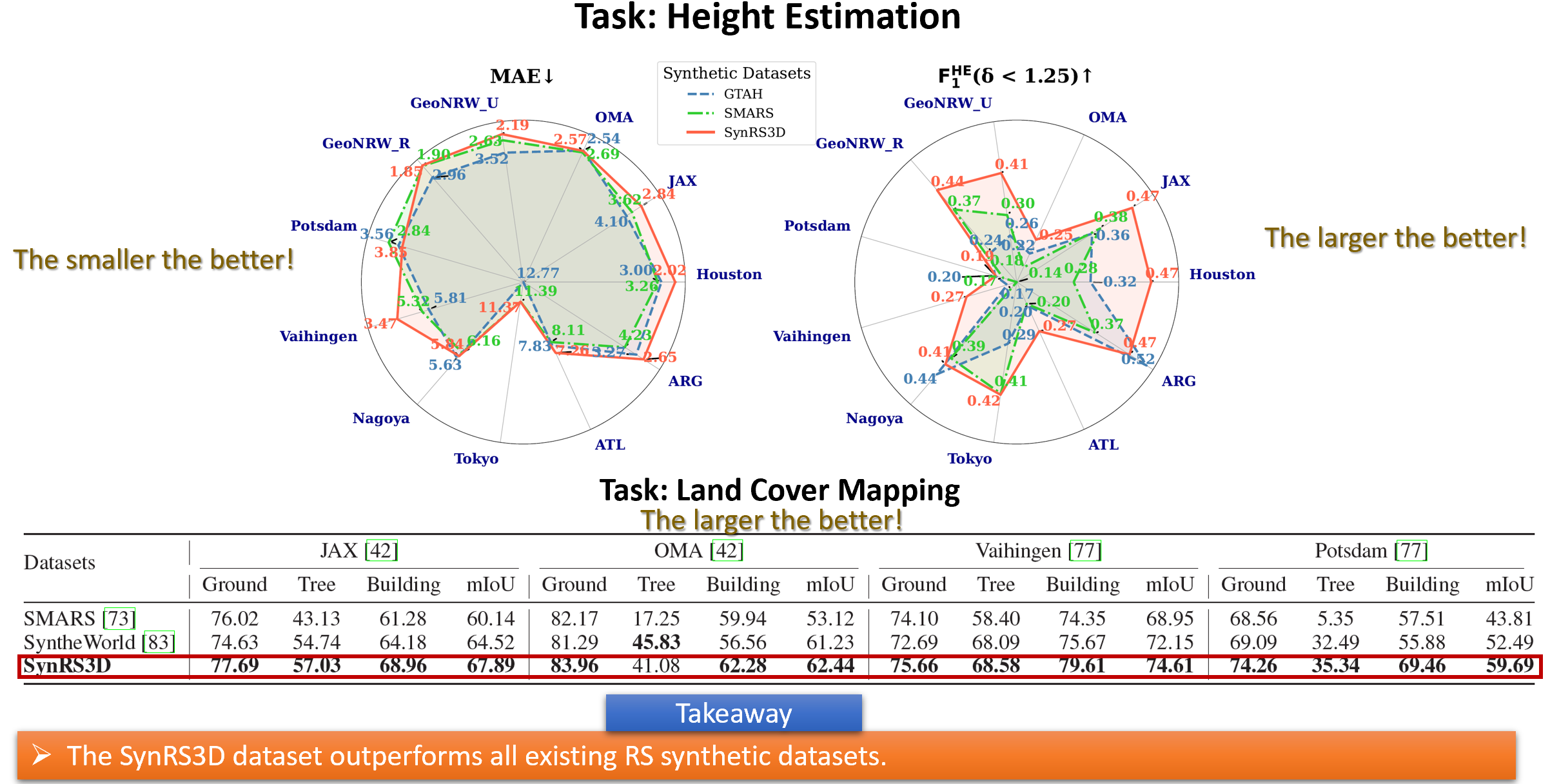

Results for the Source-only setting, showing baseline performance when training on the SynRS3D dataset alone.

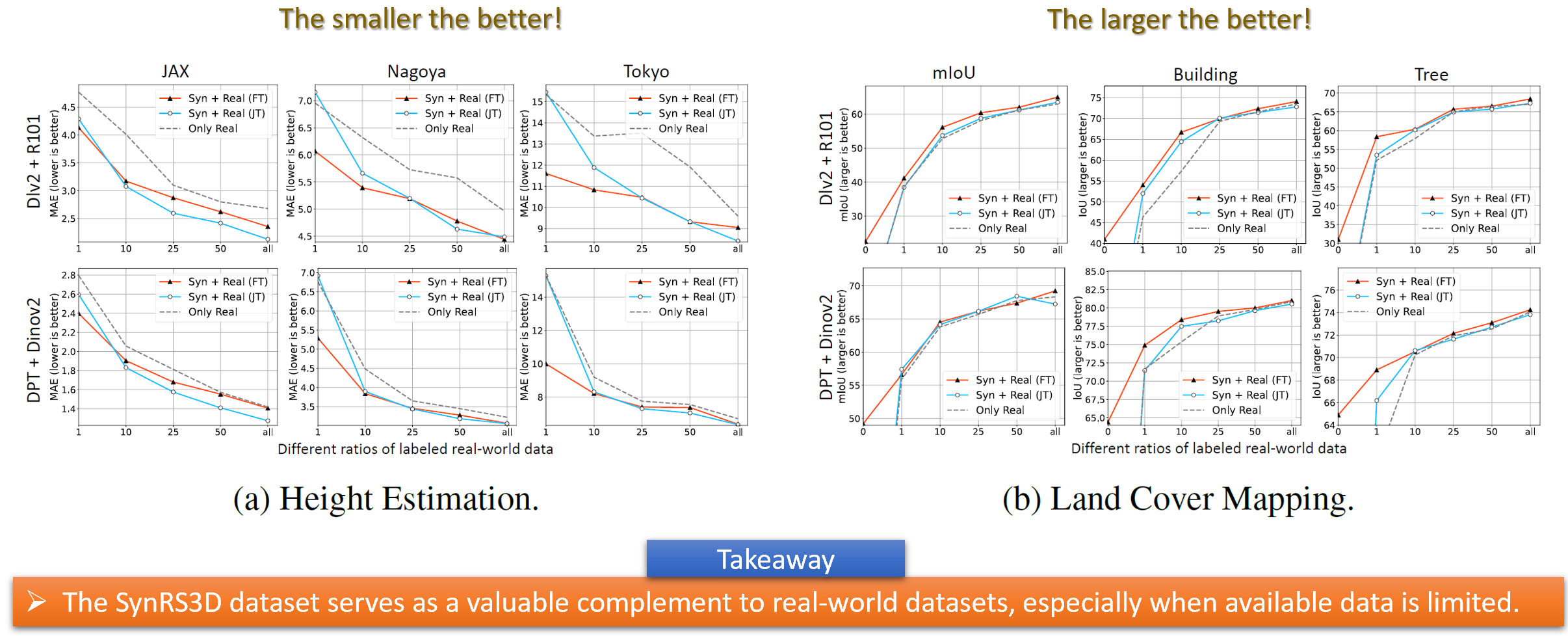

Results for the Combining SynRS3D with Real Data Scenarios setting, showing the improvements gained by training on mixed synthetic and real data.

Results for the Transfer SynRS3D to Real-World Scenarios setting, validating the effectiveness of RS3DAda for real-world applications.

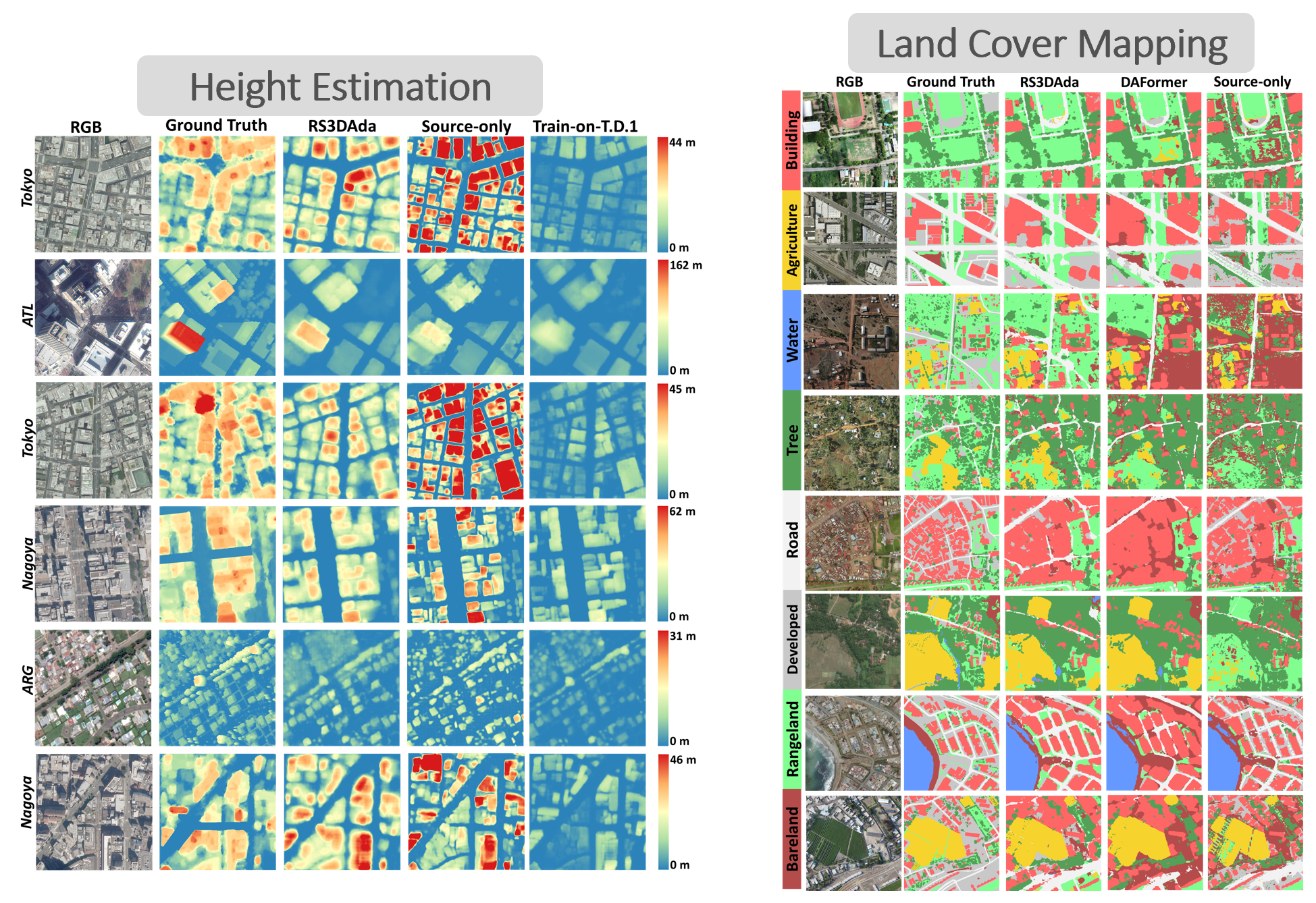

Qualitative results: height estimation (left) and land cover mapping (right).

SynRS3D supports the detection of building changes over time, helping to monitor urban development and environmental change.
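SynRS3D includes building change masks, but this page does not define exactly how they are derived. A minimal sketch, assuming two co-registered land cover maps of the same tile at times t1 and t2 and a hypothetical `BUILDING` class id, marks a pixel as changed when it is a building at exactly one of the two dates; the dataset's own masks may follow a different definition (for example, only newly constructed buildings).

```python
import numpy as np

BUILDING = 3  # hypothetical class id for "building"; check the dataset's actual label map


def building_change_mask(lc_t1: np.ndarray, lc_t2: np.ndarray,
                         building_id: int = BUILDING) -> np.ndarray:
    """Binary mask: 1 where a pixel is a building at exactly one of the two dates."""
    if lc_t1.shape != lc_t2.shape:
        raise ValueError("land cover maps must be co-registered and the same shape")
    was_building = lc_t1 == building_id
    is_building = lc_t2 == building_id
    # XOR flags both constructions (False -> True) and demolitions (True -> False).
    return np.logical_xor(was_building, is_building).astype(np.uint8)


lc_t1 = np.array([[1, 3, 3],
                  [1, 1, 2]])
lc_t2 = np.array([[1, 3, 1],
                  [3, 1, 2]])
change = building_change_mask(lc_t1, lc_t2)
print(change)
```

Replacing the XOR with `~was_building & is_building` would restrict the mask to new construction only.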

Models trained on SynRS3D can support disaster mapping, helping to assess damage after natural disasters such as earthquakes and cyclones.